Heuristic evaluation

A heuristic evaluation is a usability inspection method for computer software that helps to identify usability problems in the user interface (UI) design. It specifically involves evaluators examining the interface and judging its compliance with recognized usability principles.

The main goal is to identify any problems associated with the design of user interfaces. Rolf Molich and Jakob Nielsen developed the method based on several years of experience in teaching and consulting about usability engineering. Heuristic evaluation is one of the most informal methods of usability inspection in human-computer interaction. Many different sets of usability design heuristics exist, and they often cover the same aspects of user interface design. Usability problems are often categorized on a numerical scale according to their estimated impact on user performance or acceptance. Heuristic evaluation is frequently conducted in the context of use cases (typical user tasks), to provide feedback to the developers on the extent to which the interface is likely to be compatible with the intended users’ needs and preferences.
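Severity scales differ between teams. As a minimal sketch, assuming Nielsen's commonly cited 0-4 severity scale and several evaluators who rate each problem independently, the ratings can be averaged to decide which problems to fix first. The problem names, the input format, and the helper names `rank_problems` and `label` are hypothetical.

```python
from statistics import mean

# Nielsen's 0-4 severity scale (labels abbreviated).
SEVERITY_LABELS = {
    0: "not a usability problem",
    1: "cosmetic",
    2: "minor",
    3: "major",
    4: "catastrophe",
}


def rank_problems(ratings):
    """Average each problem's ratings across evaluators, most severe first.

    `ratings` maps a problem description to a list of 0-4 scores,
    one per evaluator (a hypothetical input format).
    """
    averaged = [(problem, mean(scores)) for problem, scores in ratings.items()]
    return sorted(averaged, key=lambda item: item[1], reverse=True)


def label(score):
    """Round a mean score to the nearest scale point and return its label."""
    return SEVERITY_LABELS[round(score)]


if __name__ == "__main__":
    # Hypothetical findings, each rated by three evaluators.
    findings = {
        "No upload progress shown": [4, 3, 4],
        "Inconsistent button labels": [2, 2, 1],
        "Low-contrast footer text": [1, 1, 2],
    }
    for problem, score in rank_problems(findings):
        print(f"{score:.2f} ({label(score)}): {problem}")
```

Averaging is only one way to combine ratings; a team might instead discuss disagreements until evaluators converge on a single score.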

Now let’s walk through Jakob Nielsen’s 10 Usability Heuristics:

- Visibility of System Status

- Match Between System and the Real World

- User Control and Freedom

- Consistency and Standards

- Error Prevention

- Recognition Rather Than Recall

- Flexibility and Efficiency of Use

- Aesthetic and Minimalist Design

- Help Users Recognize, Diagnose, and Recover from Errors

- Help and Documentation

Let’s take the first one, Visibility of System Status, and look at it:

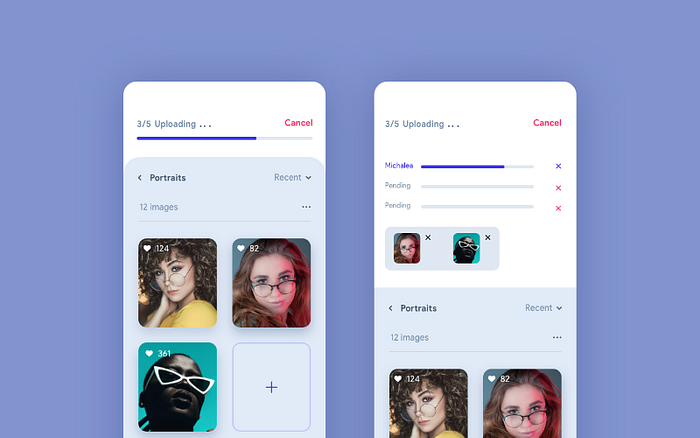

The system should always show users the status of an ongoing operation until it is complete, so that they get a clear understanding of the progress of that particular process or activity. Never leave users confused about whether a process is progressing.

In the above example, the progress bar at the top does its job by indicating that 3 out of 5 images have been uploaded. The user is well aware of the progress and can wait without hesitation until the process completes. The user can also tap the progress bar to see a detailed upload status view. Without such an indication of progress, the user might get confused and tap the back button or reload. Making the system status visible easily avoids such frustrating situations.
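To make the 3-of-5 example concrete, the sketch below shows how a hypothetical helper `upload_status` might turn a count of finished uploads into a text progress bar plus an explicit label. It is a console illustration under that assumption, not how a mobile UI framework actually draws its progress bar.

```python
def upload_status(done, total, width=20):
    """Return a text progress bar plus an 'n of m' label for an upload queue."""
    if total <= 0:
        raise ValueError("total must be positive")
    done = max(0, min(done, total))  # clamp so the bar never overflows
    filled = round(width * done / total)
    bar = "#" * filled + "-" * (width - filled)
    percent = 100 * done // total
    if done == total:
        text = f"All {total} images uploaded"
    else:
        text = f"Uploading: {done} of {total} images ({percent}%)"
    return f"[{bar}] {text}"


if __name__ == "__main__":
    for done in range(6):
        print(upload_status(done, 5))
```

For instance, `upload_status(3, 5)` returns `[############--------] Uploading: 3 of 5 images (60%)`. Showing both the count and the bar gives the user an exact figure and a quick visual cue.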

Walk-Throughs

In software engineering, a walkthrough or walk-through is a form of software peer review “in which a designer or programmer leads members of the development team and other interested parties through a software product, and the participants ask questions and make comments about possible errors, violation of development standards, and other problems”.

“Software product” normally refers to some kind of technical document. As indicated by the IEEE definition, this might be a software design document or program source code, but use cases, business process definitions, test case specifications, and a variety of other technical documentation may also be walked through.

A walkthrough differs from software technical reviews in its openness of structure and its objective of familiarization. It differs from software inspection in its ability to suggest direct alterations to the product reviewed. It lacks a direct focus on training and process improvement, and on process and product measurement.

A walkthrough may be quite informal, or may follow the process detailed in IEEE 1028 and outlined in the article on software reviews.

Objectives and participants

A walkthrough has one or two broad objectives: to get feedback about the technical quality of the document, and/or to educate the audience on its content. The author of the technical document usually directs the walkthrough. Any combination of interested or technically qualified personnel may be included.

IEEE 1028 recommends three specialist roles in a walkthrough:

- The author, who presents the software product in a step-by-step manner at the walkthrough meeting, and is probably responsible for completing most action items;

- The walkthrough leader, who conducts the walkthrough, handles administrative tasks, and ensures orderly conduct (and who is often the Author); and

- The recorder, who notes all anomalies (potential defects), decisions, and action items identified during the walkthrough meetings.

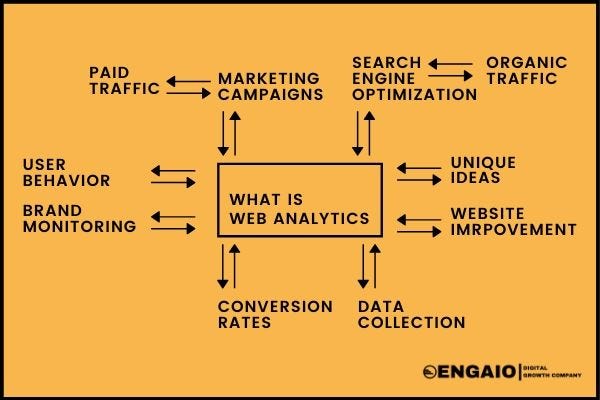

Web Analytics

Web analytics is the measurement, collection, analysis, and reporting of web data. It is a process for measuring web traffic, but it can also be used as a tool for business and market research and to assess and improve website effectiveness. Web analytics applications can also help companies measure the results of traditional print or broadcast advertising campaigns, for example by estimating how traffic to a website changes after a new advertising campaign is launched. Web analytics reports the number of visitors to a website and the number of page views, which helps gauge traffic and popularity trends for market research.

Basic steps of the web analytics process

Most web analytics processes come down to four essential stages or steps, which are:

- Collection of data: This stage is the collection of the basic, elementary data. Usually, these data are counts of things. The objective of this stage is to gather the data.

- Processing of data into information: This stage usually takes counts and turns them into ratios, although there may still be some counts. The objective of this stage is to turn the data into information, specifically metrics.

- Developing KPIs: This stage focuses on using the ratios (and counts) and infusing them with business strategies, referred to as key performance indicators (KPIs). Many times, KPIs deal with conversion aspects, but not always. It depends on the organization.

- Formulating online strategy: This stage is concerned with the online goals, objectives, and standards for the organization or business. These strategies are usually related to making money, saving money, or increasing market share.
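The first three stages can be sketched in a few lines. This is an illustration under assumed inputs: the event list, the metric names, the target value, and the helper functions are all hypothetical, and the fourth stage (formulating online strategy) is a business decision rather than something to compute.

```python
from collections import Counter

# Stage 1 input: hypothetical raw events as (visitor_id, event_type) pairs.
events = [
    ("v1", "pageview"), ("v1", "pageview"), ("v1", "purchase"),
    ("v2", "pageview"),
    ("v3", "pageview"), ("v3", "pageview"),
    ("v4", "pageview"), ("v4", "purchase"),
]


def collect_counts(events):
    """Stage 1: reduce raw events to basic counts."""
    counts = Counter(event for _, event in events)
    counts["visitors"] = len({visitor for visitor, _ in events})
    return counts


def to_metrics(counts):
    """Stage 2: turn counts into ratios (metrics)."""
    return {
        "pageviews_per_visitor": counts["pageview"] / counts["visitors"],
        "conversion_rate": counts["purchase"] / counts["visitors"],
    }


def kpi_status(metrics, targets):
    """Stage 3: compare chosen metrics with business targets (KPIs)."""
    return {name: metrics[name] >= goal for name, goal in targets.items()}


if __name__ == "__main__":
    metrics = to_metrics(collect_counts(events))
    print(kpi_status(metrics, {"conversion_rate": 0.4}))  # {'conversion_rate': True}
```

Here 2 of 4 visitors purchased, so the conversion rate of 0.5 meets the assumed 0.4 target.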

Another essential function developed by analysts for website optimization is experimentation:

- Experiments and testing: A/B testing is a controlled experiment with two variants, used in online settings such as web development.

The goal of A/B testing is to identify and suggest changes to web pages that increase or maximize the effect of a statistically tested result of interest.
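The text does not say which statistical test is used. A common choice for comparing conversion rates between two variants is the two-proportion z-test, sketched below with made-up counts. It assumes large samples (so the normal approximation holds) and that the sample size was fixed before the test started; the function name is hypothetical.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z, p_value). Uses the pooled proportion under the null
    hypothesis that both variants convert at the same rate.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in the data; the test is undefined")
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function: Phi(x) = (1 + erf(x / sqrt(2))) / 2
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical: control converts 200 of 1,000 visitors, variant 250 of 1,000.
    z, p = two_proportion_z_test(200, 1000, 250, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

With these made-up numbers z is about 2.68 and the two-sided p-value is below 0.01, so the variant's higher rate would usually be treated as statistically significant.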

Each stage impacts or can impact (i.e., drives) the stage preceding or following it. So, sometimes the data that is available for collection impacts the online strategy. Other times, the online strategy affects the data collected.

Web analytics technologies

There are at least two categories of web analytics, off-site and on-site web analytics.

- Off-site web analytics refers to web measurement and analysis regardless of whether a person owns or maintains a website. It includes the measurement of a website’s potential audience (opportunity), share of voice (visibility), and buzz (comments) that is happening on the Internet as a whole.

- On-site web analytics, the more common of the two, measures a visitor’s behavior once on a specific website. This includes its drivers and conversions; for example, the degree to which different landing pages are associated with online purchases. On-site web analytics measures the performance of a specific website in a commercial context. This data is typically compared against key performance indicators and is used to improve a website’s or marketing campaign’s audience response. Google Analytics and Adobe Analytics are the most widely used on-site web analytics services, although new tools are emerging that provide additional layers of information, including heat maps and session replay.

Historically, web analytics has been used to refer to on-site visitor measurement. However, this meaning has become blurred, mainly because vendors are producing tools that span both categories. Many different vendors provide on-site web analytics software and services. There are two main technical ways of collecting the data. The first and traditional method, server log file analysis, reads the logfiles in which the web server records file requests by browsers. The second method, page tagging, uses JavaScript embedded in the webpage to make image requests to a third-party analytics-dedicated server, whenever a webpage is rendered by a web browser or, if desired, when a mouse click occurs. Both collect data that can be processed to produce web traffic reports.
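The first method, server log file analysis, can be sketched as follows. This assumes the web server writes the Common Log Format (`host ident user [time] "request" status bytes`), and it treats distinct client IP addresses as a rough stand-in for visitors, which proxies and shared networks make imprecise. The sample lines and the `page_views` helper are hypothetical.

```python
import re
from collections import Counter

# Common Log Format: host ident authuser [date] "request" status bytes
LOG_PATTERN = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+'
)
# Requests for these files are page resources, not page views.
ASSET_SUFFIXES = (".css", ".js", ".png", ".jpg", ".gif", ".ico")


def page_views(lines):
    """Count successful page requests per path and distinct client hosts."""
    views = Counter()
    hosts = set()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match:
            continue  # skip malformed lines
        if match["method"] != "GET" or not match["status"].startswith("2"):
            continue  # keep only successful GET requests
        path = match["path"]
        if path.endswith(ASSET_SUFFIXES):
            continue
        views[path] += 1
        hosts.add(match["host"])
    return views, len(hosts)


sample = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326',
    '203.0.113.5 - - [10/Oct/2023:13:55:37 +0000] "GET /logo.png HTTP/1.1" 200 5120',
    '198.51.100.7 - - [10/Oct/2023:13:56:01 +0000] "GET /index.html HTTP/1.1" 200 2326',
    '198.51.100.7 - - [10/Oct/2023:13:56:09 +0000] "GET /pricing HTTP/1.1" 404 512',
]

if __name__ == "__main__":
    views, visitors = page_views(sample)
    print(views, visitors)
```

On the sample, the image request and the 404 are filtered out, leaving two views of `/index.html` from two distinct hosts. Page tagging avoids some of these heuristics because the tag fires only when a page is actually rendered in a browser.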

For example, the most popular web analytics tool is Google Analytics, although there are many others on the market offering specialized information such as real-time activity or heat mapping.

The following are some of the most commonly used tools:

- Google Analytics — the ‘standard’ website analytics tool, free and widely used

- Piwik — an open-source solution similar in functionality to Google and a popular alternative, allowing companies full ownership and control of their data

- Adobe Analytics — highly customizable analytics platform (Adobe bought analytics leader Omniture in 2009)

- Kissmetrics — can zero in on individual behavior, i.e. cohort analysis, conversion and retention at the segment or individual level

- Mixpanel — advanced mobile and web analytics that measure actions rather than pageviews

- Parse.ly — offers detailed real-time analytics, specifically for publishers

- CrazyEgg — measures which parts of the page are getting the most attention using ‘heat mapping’

With a wide variety of analytics tools on the market, the right vendor for your company will depend on your specific requirements. Optimizely integrates with most of the leading platforms to simplify data analysis.

A/B Testing

Ubisoft used A/B testing to increase its lead generation by 12%

Ubisoft Entertainment is one of the leading French video game companies. It is best known for publishing games in several renowned franchises, such as For Honor, Tom Clancy’s, Assassin’s Creed, and Just Dance, and for delivering memorable gaming experiences.

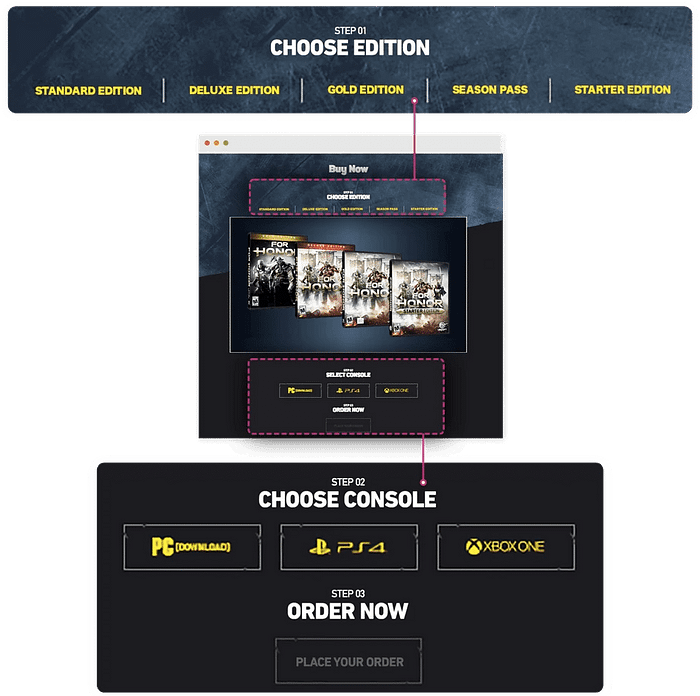

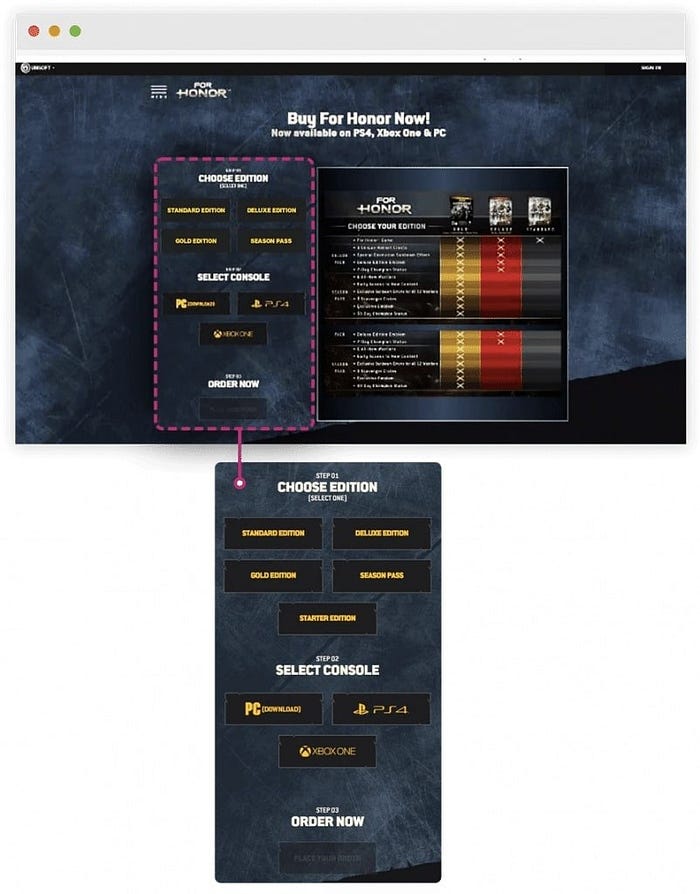

For Ubisoft, lead generation and conversion rate are two key metrics for analyzing overall performance. While some of its pages performed well on both metrics, its ‘Buy Now’ page dedicated to the ‘For Honor’ brand was underperforming.

Ubisoft’s team investigated the matter, collected visitor data using clickmaps, scrollmaps, heatmaps, and surveys, and concluded that the buying process was too tedious. The company decided to overhaul For Honor’s Buy Now page completely, reducing up-and-down scrolling and simplifying the entire buying process.

Here’s how the control and variation looked:

After running the test for about three months, Ubisoft saw that the variation brought in more conversions than the control. Conversions went up from 38% to 50%, and overall lead generation increased by 12%.
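A change from 38% to 50% can be read in two ways that are easy to confuse: as an absolute change in percentage points or as a relative lift. A quick check of the arithmetic, using only the two rates quoted above (the helper name `lift` is hypothetical):

```python
def lift(control_rate, variant_rate):
    """Return (absolute change in percentage points, relative change in %)."""
    diff = variant_rate - control_rate
    return diff * 100, diff / control_rate * 100


points, relative = lift(0.38, 0.50)
print(f"+{points:.0f} percentage points, +{relative:.1f}% relative")
# prints: +12 percentage points, +31.6% relative
```

Reporting which of the two figures is meant avoids overstating or understating an A/B test result.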

Predictive models

In short, predictive modeling is a statistical technique using machine learning and data mining to predict and forecast likely future outcomes with the aid of historical and existing data. It works by analyzing current and historical data and projecting what it learns on a model generated to forecast likely outcomes. Predictive modeling can be used to predict just about anything, from TV ratings and a customer’s next purchase to credit risks and corporate earnings.

A predictive model is not fixed; it is validated or revised regularly to incorporate changes in the underlying data. In other words, it’s not a one-and-done prediction. Predictive models make assumptions based on what has happened in the past and what is happening now. If incoming, new data shows changes in what is happening now, the impact on the likely future outcome must be recalculated, too. For example, a software company could model historical sales data against marketing expenditures across multiple regions to create a model for future revenue based on the impact of the marketing spend.
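The software company example can be sketched with the simplest possible predictive model: an ordinary least squares line relating marketing spend to revenue. The figures below are invented, a single region is assumed, and real revenue models would use many more inputs and proper validation. The point is the refit step: when new data arrives, the model is recalculated rather than reused unchanged.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


# Hypothetical history: quarterly marketing spend vs revenue (both in $k).
spend = [10, 20, 30, 40]
revenue = [120, 190, 260, 330]

a, b = fit_line(spend, revenue)
print(f"forecast at 50k spend: {a + b * 50:.0f}k")  # forecast at 50k spend: 400k

# A new quarter comes in below the forecast, so the model is refit.
spend.append(50)
revenue.append(380)
a2, b2 = fit_line(spend, revenue)
print(f"revised slope: {b2:.2f} (was {b:.2f})")
```

After the new data point, the estimated return on each extra $1k of spend drops from 7.0 to 6.6, and future forecasts change accordingly.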

Most predictive models work fast and often complete their calculations in real time. That’s why banks and retailers can, for example, calculate the risk of an online mortgage or credit card application and accept or decline the request almost instantly based on that prediction.
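The text does not name a model, but one common reason scoring can happen almost instantly is that, once trained, many models reduce to a handful of arithmetic operations. The sketch below assumes a logistic regression model; its feature names, weights, bias, and decision threshold are hand-picked for illustration and do not come from any real lender.

```python
from math import exp

# Hypothetical coefficients for illustration only; a real model would
# learn these from historical application data.
WEIGHTS = {"debt_to_income": 4.0, "missed_payments": 0.8, "years_employed": -0.15}
BIAS = -3.0


def default_probability(applicant):
    """Logistic model: P(default) = 1 / (1 + e^-(bias + sum of w_i * x_i))."""
    score = BIAS + sum(w * applicant[name] for name, w in WEIGHTS.items())
    return 1 / (1 + exp(-score))


def decide(applicant, threshold=0.2):
    """Accept if the predicted default risk is below the threshold."""
    return "accept" if default_probability(applicant) < threshold else "decline"


if __name__ == "__main__":
    low_risk = {"debt_to_income": 0.2, "missed_payments": 0, "years_employed": 5}
    high_risk = {"debt_to_income": 0.6, "missed_payments": 3, "years_employed": 1}
    print(decide(low_risk))   # accept
    print(decide(high_risk))  # decline
```

The low-risk applicant scores a default probability of about 5% and the high-risk one about 84%, so each decision takes only a few multiplications and one exponential.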

Some predictive models are more complex, such as those used in computational biology and quantum computing; the resulting outputs take longer to compute than a credit card application but are done much more quickly than was possible in the past thanks to advances in technological capabilities, including computing power.

Thank you!
